by crissly | May 9, 2023 | Uncategorized

An LLM cannot write a cohesive, novel-length narrative from start to finish. At present, ChatGPT can produce roughly 600 words at a time, so in order to complete a novel, a human has to feed it prompts and then collage its outputs into a complete story. One prompt might be something like “Describe the death of an author in the style of CBC news.” The next might be “Write Augustus’ response to this death.” The computer can’t keep track of the minutiae of plot and character, leaving holes in the process.

In his afterword to Death of an Author (required reading for anyone who wants to think seriously about the future of LLM-assisted writing), Marche explains this patchwork process of composing the novel. He reread some of the great detective fiction writers, like Agatha Christie, Raymond Chandler, and James Myers Thompson, and employed ChatGPT to produce passages in their styles.

To polish the outputs into more readable language, he ran the text through Sudowrite, another LLM that allows for more stylistic authorial control (making sentences longer or shorter, rephrasing text, etc.) and then used yet another program, Cohere, to generate poetic similes, refining the language even further. Marche’s goal might have been Chandler, but to this reader’s ear, the prose is closer to Dan Brown—compulsively readable, but not in danger of winning the Edgar.

Despite the use of so many different programs and styles, this text has Stephen Marche’s signature all over it. Marche even said as much to The New York Times: “I am the creator of this work, 100 percent.” What’s striking, though, is what he says next: “But on the other hand, I didn’t create the words.”

It’s important to meditate on this renunciation of authorship because it seems central to current misunderstandings about what LLMs are and why they make us so nervous. On the one hand, Marche acknowledges his role in the creation of the text: “I had an elaborate plan … I have a familiarity with the technology … I know what good writing looks like.” On the other, he chose to publish the work under a pseudonym, Aidan Marchine, a portmanteau of machine and Marche. Calling him the author of Death of an Author wouldn’t just be a tongue twister, it would be, according to Marche, “inaccurate as a matter of fact.”

This seems like a missed opportunity. As someone who has just finished writing two novels that incorporate LLM language, I agree with Marche that only a good writer will make anything worthwhile with these programs. Because of this, it seems important to acknowledge the human hand in every aspect of the writing process.

Even the choice to include a particular LLM output over another is a human decision, not unlike the selective reframing employed by artists like Marcel Duchamp and Andy Warhol. Giving creative credit to the LLM seems to turn an elaborate collaboration between a human and a machine into a flashy tech gimmick. And it plays into the hands of those who claim that writing with LLMs is not “real” writing and is not worthy of copyright protection, as the US Copyright Office recently argued.

LLMs are not authors, nor do they possess intelligence. They are simply tools. They are computer programs trained to recognize patterns in how we write and then use these patterns to produce language that appears like conscious and coherent thought. At least for the foreseeable future, these programs do not operate without prompts. They can’t produce text at a random moment out of their own creative inspiration. They can’t prompt themselves to fulfill apocalyptic fantasies and take over the planet. They begin and end with human direction. As such, the material that LLMs produce should be seen as a collaboration between a human author and a machine. The author asks the machine for language and then creatively determines what to do with the machine’s outputs.

It might be helpful to situate Death of an Author not in the tradition of LLM writing, but in the larger field of literary supercuts, or works of fiction made entirely out of found language. While the history of literary supercuts is less well known, fiction writers have been incorporating found language for centuries. Al-Jāḥiẓ, a medieval Arabic author, borrowed plenty from other sources. Herman Melville’s Moby-Dick begins with 13 pages of found whale descriptions.

by crissly | May 9, 2023 | Uncategorized

Simply put, software doesn’t age well. Hardware, in time, always becomes obsolete, but if it survives long enough (think Olympia typewriters) it transfigures from junk into a vintage collectible or gets a shot at a stylistic reincarnation (skeuomorphism or going “retro”). But rarely does anyone reserve the same kind of generosity for a piece of crummy old software. Which is to say, when people hate on software products, the hatred is not the more complex and sticky kind directed at, say, Philip Roth. People really want to see no more of it.

And 17 years after the launch of Google Docs, its adoption is widespread but nowhere near universal. If your workplace is filled with Macs more than Lenovos, you might be surprised to learn that Microsoft Word still dominates in market share. If Microsoft Word is like a combo kit of DeWalt power tools, Google Docs is a budget Swiss Army knife that is serviceable but always leaves more to be desired. What baffles me is that the intervening years since Google Docs’ initial launch provided more than enough time to achieve feature parity with Microsoft Word, but it’s as if Google Docs never mustered the will. Instead, it has focused on small-time features (emoji reactions), and recent product announcements (“pageless” format, for example) have strained to surprise.

During those lackluster development cycles, the word-processing space has been filled with a glut of writing apps. Not always successful but boldly experimental, they are more minimalist, maximalist, hipster, thoughtful, annoying, customizable, opinionated, over/under-engineered than Google Docs. To name names, Bear, Coda, Airtable, Notion, Overleaf, Scrivener, iA Writer, Ulysses, and Obsidian come to mind.

Google Docs, though well made, has never felt artisanal the way iA Writer or Ulysses does. But it would be a mistake to insist too much on its lesser aspects. Its successful use of operational transformation (OT) showed, once and for all, that the complexity of real-time editing could be tamed, a proof of concept to which many collaborative software programs of today owe their existence. OT also forged a path for more elegant collaborative solutions such as, for those who care, conflict-free replicated data types (CRDTs), which are used in domains such as music (SoundCloud) and design (Figma). In the genetics of modern software, it’d be rare to find software programs where Google Docs’ DNA segments are completely absent.
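For those who do care, the core idea behind a CRDT fits in a few lines. What follows is a minimal sketch of the simplest textbook example, a grow-only counter, and not a description of how Google Docs, SoundCloud, or Figma work internally; the class name `GCounter` and the replica names are made up for illustration. Each replica only ever increases its own slot, and merging takes the per-replica maximum, so replicas can sync in any order, any number of times, and still agree.

```python
from dataclasses import dataclass, field


@dataclass
class GCounter:
    """Grow-only counter CRDT (illustrative only, not any product's code)."""

    replica_id: str
    counts: dict = field(default_factory=dict)

    def increment(self, amount: int = 1) -> None:
        # A replica may only raise its own slot, never anyone else's.
        if amount < 0:
            raise ValueError("a grow-only counter cannot decrease")
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + amount

    def merge(self, other: "GCounter") -> None:
        # Element-wise max is commutative, associative, and idempotent,
        # which is what lets replicas converge without coordination.
        for rid, n in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), n)

    @property
    def value(self) -> int:
        return sum(self.counts.values())


def demo() -> tuple:
    # Two hypothetical replicas edit independently, then exchange state.
    laptop, phone = GCounter("laptop"), GCounter("phone")
    laptop.increment(2)
    phone.increment(3)
    laptop.merge(phone)
    phone.merge(laptop)
    laptop.merge(phone)  # a repeated merge changes nothing
    return laptop.value, phone.value
```

Collaborative text editors need richer structures than a counter (ordered sequences with stable positions for each character), but the property they rely on is the same: a merge that gives the same result regardless of arrival order.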

And the usage pattern of those other writing apps turned out to be more pluralistic: Instead of relying on a single general-purpose app, users employ different apps for quick note-taking (Apple Notes), drafting (iA Writer), scriptwriting (Scrivener), and reference management (Zotero). Against that fragmentation, Google Docs still excels in universality and has achieved a near-protocol status. Google Docs may be second-rate in the second-rate and third-rate features, but it’s first-rate in the first-rate ones. For what it’s worth, this article was edited in Google Docs.

by crissly | May 8, 2023 | Uncategorized

Friction is resistance. In this case, it tells you how hard it is for something to get across the membrane. If you engineer a membrane that has less resistance to water, and more resistance to salt or whatever else you want to remove, you get a cleaner product with potentially less work.

But that model got shelved in 1965, when another group introduced a simpler model. This one assumed that the plastic polymer of the membrane was dense and had no pores through which water could run. It also didn’t hold that friction played a role. Instead, it presumed that water molecules in a saltwater solution would dissolve into the plastic and diffuse out of the other side. For that reason, this is called the “solution-diffusion” model.

Diffusion is the flow of a chemical from where it’s more concentrated to where it’s less concentrated. Think of a drop of dye spreading throughout a glass of water, or the smell of garlic wafting out of a kitchen. It keeps moving toward equilibrium until its concentration is the same everywhere, and it doesn’t rely on a pressure difference, like the suction that pulls water through a straw.
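To see that behavior in numbers, here is a toy simulation, offered as a rough sketch rather than anything the researchers used. It treats the glass of water as a row of cells and, at each step, moves a little dye from fuller cells to emptier neighbors. The function name `diffuse`, the cell count, and the `rate` parameter are all assumptions chosen for illustration.

```python
def diffuse(conc, rate=0.25, steps=1):
    """Spread concentration along a closed 1D row of cells.

    Explicit finite-difference update; `rate` must stay in (0, 0.5]
    for the scheme to remain stable. Illustrative toy model only.
    """
    if not 0 < rate <= 0.5:
        raise ValueError("rate must be in (0, 0.5]")
    cells = list(conc)
    for _ in range(steps):
        nxt = cells[:]
        for i in range(len(cells)):
            # Closed ends: a missing neighbor mirrors the cell itself,
            # so nothing leaks out of the container.
            left = cells[i - 1] if i > 0 else cells[i]
            right = cells[i + 1] if i < len(cells) - 1 else cells[i]
            nxt[i] = cells[i] + rate * (left - 2 * cells[i] + right)
        cells = nxt
    return cells


# All the dye starts in the first cell of five.
start = [100.0, 0.0, 0.0, 0.0, 0.0]
settled = diffuse(start, steps=2000)
```

After enough steps every cell holds nearly the same amount and the total is unchanged. No pressure term appears anywhere in the update; only differences in concentration move the dye.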

The model stuck, but Elimelech always suspected it was wrong. To him, accepting that water diffuses through the membrane implied something strange: that the water scattered into individual molecules as it passed through. “How can it be?” Elimelech asks. Breaking up clusters of water molecules requires a ton of energy. “You almost need to evaporate the water to get it into the membrane.”

Still, Hoek says, “20 years ago it was anathema to suggest that it was incorrect.” Hoek didn’t even dare to use the word “pores” when talking about reverse osmosis membranes, since the dominant model didn’t acknowledge them. “For many, many years,” he says wryly, “I’ve been calling them ‘interconnected free volume elements.’”

Over the past 20 years, images taken using advanced microscopes have reinforced Hoek and Elimelech’s doubts. Researchers discovered that the plastic polymers used in desalination membranes aren’t so dense and poreless after all. They actually contain interconnected tunnels—although they are absolutely minuscule, peaking at around 5 angstroms in diameter, or half a nanometer. Still, one water molecule is about 1.5 angstroms long, so that’s enough room for small clusters of water molecules to squeeze through these cavities, instead of having to go one at a time.
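The arithmetic behind that claim is quick to check. The sketch below uses only the two figures quoted above and makes a crude assumption, labeled in the code, that molecules can be laid end to end across the widest point of a tunnel; real molecules are not rigid rods, so treat the result as a back-of-the-envelope estimate.

```python
PORE_DIAMETER_ANGSTROM = 5.0      # widest tunnels, as quoted in the text
WATER_MOLECULE_ANGSTROM = 1.5     # approximate length, as quoted in the text
ANGSTROMS_PER_NANOMETER = 10.0


def pore_diameter_nm() -> float:
    return PORE_DIAMETER_ANGSTROM / ANGSTROMS_PER_NANOMETER


def molecules_across() -> int:
    # Assumption: molecules sit end to end across the tunnel's diameter.
    return int(PORE_DIAMETER_ANGSTROM // WATER_MOLECULE_ANGSTROM)
```

Under that assumption, about three molecules fit side by side across the widest tunnels, which is consistent with small clusters passing through together.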

About two years ago, Elimelech felt the time was right to take down the solution-diffusion model. He worked with a team: Li Wang, a postdoc in Elimelech’s lab, examined fluid flow through small membranes to take real measurements. Jinlong He, at the University of Wisconsin-Madison, tinkered with a computer model simulating what happens at the molecular scale as pressure pushes salt water through a membrane.

Predictions based on a solution-diffusion model would say that water pressure should be the same on both sides of the membrane. But in this experiment, the team found that the pressure at the entrance and exit of the membrane differed. This suggested that pressure drives water flow through the membrane, rather than simple diffusion.

by crissly | May 7, 2023 | Uncategorized

The first is to make sure you’re using the Following tab instead of For You. This tab is just tweets from the people you follow, so it doesn’t artificially favor Twitter Blue subscribers. You could also try blocking all retweets if you want to clean things up a little more.

If that’s not enough, you could try TweetDeck, the power user’s version of Twitter. This tool doesn’t have a For You page or any algorithmic sorting, meaning Twitter Blue users aren’t artificially boosted on it. TweetDeck is the one part of Twitter that’s completely untouched by the recent changes there, and I hope this doesn’t change. (So please, no one tell Elon Musk that TweetDeck still exists.)

Another cool extension, if you miss seeing legacy checkmarks, is Eight Dollars. Right now blue checkmarks only show up if someone is paying for Blue, meaning most people who had a checkmark prior to April 20, 2023, no longer have one. Eight Dollars can show you which users were verified before legacy verified checkmarks were removed, which is useful if you trusted the old system.

There’s a cat-and-mouse element to all of this, of course. Twitter’s current regime really, really wants to turn Blue checkmarks into sellable status symbols, and any tool that diminishes the visibility of Blue subscribers cuts against that goal. They’re going to try to find a way to break this tool and all similar ones. For now, though, they work, so use them while you can.

Or you could just abandon your Twitter account, set up a public archive of your tweets, and learn how to get started on Mastodon or Bluesky. Up to you.

by crissly | May 6, 2023 | Uncategorized

This story originally appeared on Yale Environment 360 and is part of the Climate Desk collaboration.

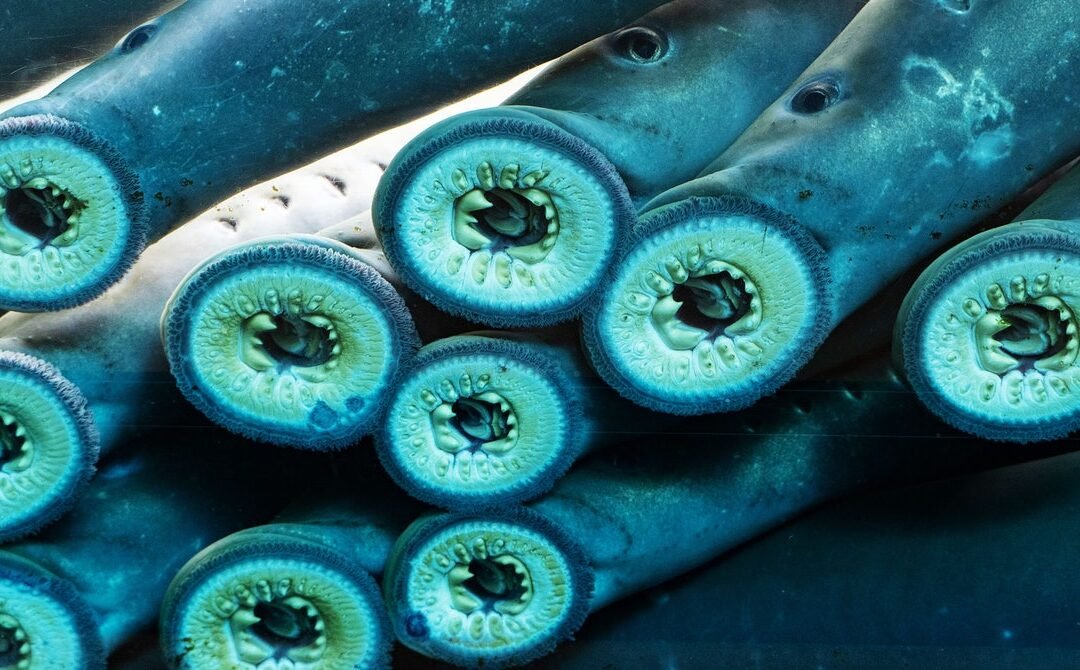

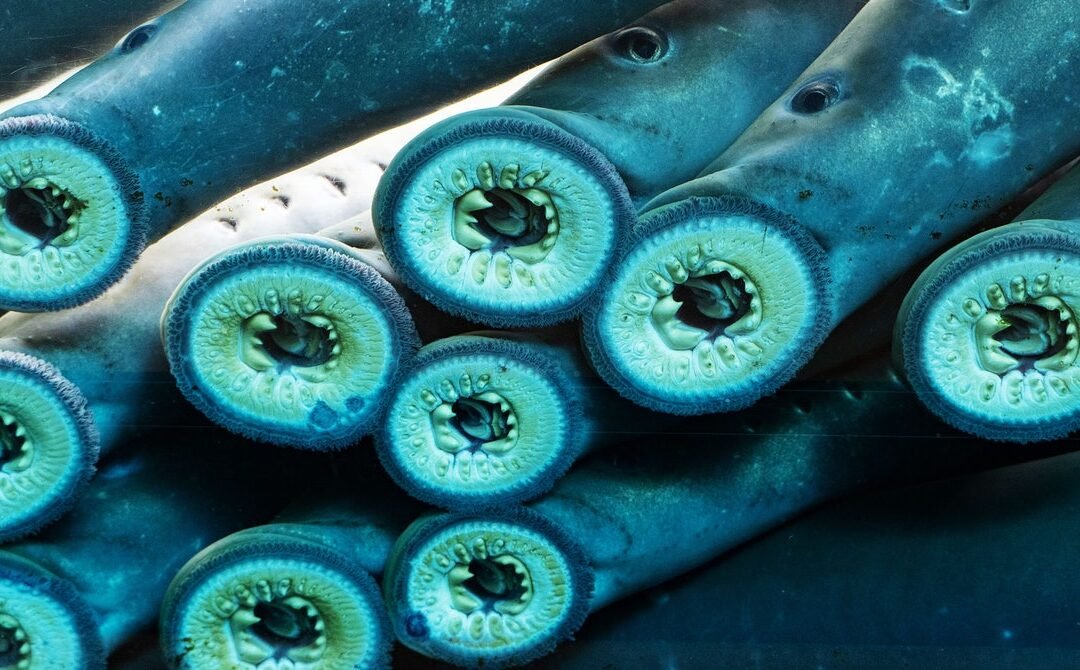

“Thousands of sea lamprey are passed upstream [on the Connecticut River] each year. This is a predator that wiped out the Great Lakes lake-trout fishery. [Lampreys] literally suck the life out of their host fish, namely small-scale fish such as trout and salmon. The fish ladders ought to be used to diminish the lamprey.” So editorialized the Eagle-Tribune of Lawrence, Massachusetts, on December 15, 2002.

If that’s true, why this spring is Trout Unlimited—the nation’s leading advocate for trout and salmon—assisting the Town of Wilton, Connecticut, and an environmental group called Save the Sound in a project that will restore 10 miles of sea lamprey spawning habitat on the Norwalk River, which flows into Long Island Sound?

Why this summer will the first big returns from stocked Pacific lampreys—a species similar to sea lampreys—climb specially designed lamprey ramps at Columbia River dams and surge into historical spawning habitat in Oregon, Washington, and Idaho?

And why, when the canal at Turners Falls on the Connecticut River is drawn down in September, will the Connecticut River Conservancy, Fort River Watershed Association, and the Biocitizen environmental school rescue stranded sea lamprey larvae?

The answer is ecological awakening—the gradual realization that, if the whole of nature is good, no part can be bad. In their native habitat, marine lampreys are “keystone species” supporting vast aquatic and terrestrial ecosystems. They provide food for insects, crayfish, fish, turtles, minks, otters, vultures, herons, loons, ospreys, eagles, and hundreds of other predators and scavengers. Lamprey larvae, embedded in the stream bed, maintain water quality by filter feeding; and they attract spawning adults from the sea by releasing pheromones. Because adults die after spawning, they infuse sterile headwaters with nutrients from the sea. When marine lampreys build their communal nests, they clear silt from the river bottom, providing spawning habitat for countless native fish, especially trout and salmon.

Environmental consultant Stephen Gephard, formerly Connecticut’s anadromous-fish chief, calls lampreys “environmental engineers” as important to native ecosystems as beavers.

Marine lampreys, our elders by some 340 million years, depend on cold, free-flowing freshwater for spawning. They are boneless, jawless, eel-like fish with fleshy fins. They extract body fluids from other fish via tooth-studded suction disks. Both sea lampreys and Pacific lampreys are widely reviled because they are perceived as “ugly” and because sea lampreys decimated indigenous fish in the upper Great Lakes when they gained access to those waters via human-built canals, most likely the Welland Canal that bypassed Niagara Falls. Once there, they nearly wiped out valuable commercial and sport fisheries for lake trout (the largest char species, not a true trout like rainbows, cutthroats, and browns).

By the 1960s, nonnative sea lampreys had reduced the annual commercial take of lake trout in the upper Great Lakes from about 15 million pounds to half a million pounds. In 1955, Canada and the United States established the Great Lakes Fishery Commission, which controls lampreys with barriers, traps, and a remarkably selective larval poison called TFM. Lamprey control costs $15 million to $20 million a year; and without it, ongoing lake-trout recovery would be impossible, and populations of all other sport fish would crash.