by crissly | Aug 14, 2022 | Uncategorized

There isn’t much we can agree on these days. But two sweeping statements that might garner broad support are “We need to fix technology” and “We need to fix democracy.”

There is growing recognition that rapid technology development is producing society-scale risks: state and private surveillance, widespread labor automation, ascending monopoly and oligopoly power, stagnant productivity growth, algorithmic discrimination, and the catastrophic risks posed by advances in fields like AI and biotechnology. Less often discussed, but in my view no less important, is the loss of potential advances that lack short-term or market-legible benefits. These include vaccine development for emerging diseases and open source platforms for basic digital affordances like identity and communication.

At the same time, as democracies falter in the face of complex global challenges, citizens (and increasingly, elected leaders) around the world are losing trust in democratic processes and are being swayed by autocratic alternatives. Nation-state democracies are, to varying degrees, beset by gridlock and hyper-partisanship, little accountability to the popular will, inefficiency, flagging state capacity, inability to keep up with emerging technologies, and corporate capture. While smaller-scale democratic experiments are growing, locally and globally, they remain far too fractured to handle consequential governance decisions at scale.

This puts us in a bind. Clearly, we could be doing a better job directing the development of technology towards collective human flourishing—in fact, this may be one of the greatest challenges of our time. If actually existing democracy is so riddled with flaws, it doesn’t seem up to the task. This is what rings hollow in many calls to “democratize technology”: Given the litany of complaints, why subject one seemingly broken system to governance by another?

At the same time, as we deal with everything from surveillance to space travel, we desperately need ways to collectively negotiate complex value trade-offs with global consequences, and ways to share in their benefits. This definitely seems like a job for democracy, albeit a much better iteration. So how can we radically update democracy so that we can successfully navigate toward long-term, shared positive outcomes?

The Case for Collective Intelligence

To answer these questions, we must realize that our current forms of democracy are only early and highly imperfect manifestations of collective intelligence: coordination systems that incorporate and process decentralized, agentic, and meaningful decision-making across individuals and communities to produce best-case decisions for the collective.

Collective intelligence, or CI, is not the purview of humans alone. Networks of trees, enabled by mycelia, can exhibit intelligent characteristics, sharing nutrients and sending out distress signals about drought or insect attacks. Bees and ants manifest swarm intelligence through complex processes of selection, deliberation, and consensus, using the vocabulary of physical movement and pheromones. In fact, humans are not even the only animals that vote. African wild dogs, when deciding whether to move locations, will engage in a bout of sneezing to determine whether quorum has been reached, with the tipping point determined by context—for example, lower-ranked individuals require a minimum of 10 sneezes to achieve what a higher-ranked individual could get with only three. Buffaloes, baboons, and meerkats also make decisions via quorum, with flexible “rules” based on behavior and negotiation.
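The wild dogs' sneeze vote is, in effect, a rank-dependent quorum rule, and it can be written down in a few lines. What follows is only a minimal sketch: the thresholds of 10 and three sneezes come from the example above, while the hard cutoff, the two-level "high"/"low" ranking, and the names `SNEEZE_QUORUM` and `pack_departs` are assumptions made for illustration. Real packs negotiate far more fluidly.

```python
# Minimum sneeze counts taken from the example in the text. Treating them as
# hard thresholds, and rank as a two-level label, is an assumption of this sketch.
SNEEZE_QUORUM = {"high": 3, "low": 10}


def pack_departs(initiator_rank: str, sneezes: int) -> bool:
    """Return True when the sneeze count reaches the quorum for the initiator's rank."""
    return sneezes >= SNEEZE_QUORUM[initiator_rank]


# The same three sneezes carry a proposal from a high-ranked dog
# but fall short for a low-ranked one.
for rank, sneezes in [("high", 3), ("low", 3), ("low", 10)]:
    print(rank, sneezes, pack_departs(rank, sneezes))
```

The point of the sketch is that the "rule" is not one fixed majority but a threshold that shifts with who is proposing, which is the kind of flexible quorum the other species are described as using.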

But humans, unlike meerkats or ants, don’t have to rely on the pathways to CI that our biology has hard-coded into us, or wait until the slow, invisible hand of evolution tweaks our processes. We can do better on purpose, recognizing that progress and participation don’t have to trade off. (This is the thesis on which my organization, the Collective Intelligence Project, is predicated.)

Our stepwise innovations in CI systems—such as representative, nation-state democracy, capitalist and noncapitalist markets, and bureaucratic technocracy—have already shaped the modern world. And yet, we can do much better. These existing manifestations of collective intelligence are only crude versions of the structures we could build to make better collective decisions over collective resources.

by crissly | Apr 19, 2022 | Uncategorized

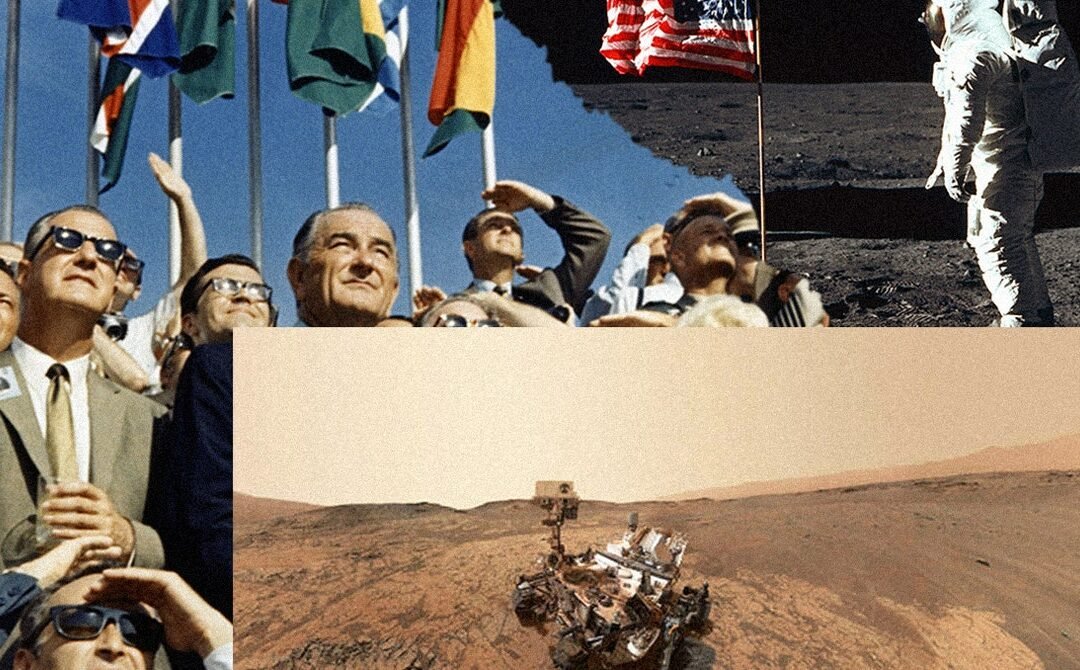

How much do we need humans in space? How much do we want them there? Astronauts embody the triumph of human imagination and engineering. Their efforts shed light on the possibilities and problems posed by travel beyond our nurturing Earth. Their presence on the moon or on other solar-system objects can imply that the countries or entities that sent them there possess ownership rights. Astronauts promote an understanding of the cosmos, and inspire young people toward careers in science.

When it comes to exploration, however, our robots can outperform astronauts at a far lower cost and without risk to human life. This assertion, once a prediction for the future, has become reality today, and robot explorers will continue to become ever more capable, while human bodies will not.

Fifty years ago, when the first geologist to reach the moon suddenly recognized strange orange soil (the likely remnant of previously unsuspected volcanic activity), no one claimed that an automated explorer could have accomplished this feat. Today, we have placed a semi-autonomous rover on Mars, one of a continuing suite of orbiters and landers, with cameras and other instruments that probe the Martian soil, capable of finding paths around obstacles as no previous rover could.

Since Apollo 17 left the moon in 1972, the astronauts have journeyed no farther than low Earth orbit. In this realm, astronauts’ greatest achievement by far came with their five repair missions to the Hubble Space Telescope, which first saved the giant instrument from uselessness and then extended its life by decades by providing upgraded cameras and other systems. (Astronauts could reach the Hubble only because the Space Shuttle, which launched it, could go no farther from Earth, which produces all sorts of interfering radiation and light.) Each of these missions cost about a billion dollars in today’s money. The cost of a telescope to replace the Hubble would likewise have been about a billion dollars; one estimate has set the cost of the five repair missions equal to that for constructing seven replacement telescopes.

Today, astrophysicists have managed to send all of their new spaceborne observatories to distances four times farther than the moon, where the James Webb Space Telescope now prepares to study a host of cosmic objects. Our robot explorers have visited all the sun’s planets (including that former planet Pluto), as well as two comets and an asteroid, securing immense amounts of data about them and their moons, most notably Jupiter’s Europa and Saturn’s Enceladus, where oceans that lie beneath an icy crust may harbor strange forms of life. Future missions from the United States, the European Space Agency, China, Japan, India, and Russia will only increase our robot emissaries’ abilities and the scientific importance of their discoveries. Each of these missions has cost far less than a single voyage that would send humans—which in any case remains an impossibility for the next few decades, for any destination save the moon and Mars.

In 2020, NASA revealed a list of accomplishments titled “20 Breakthroughs From 20 Years of Science Aboard the International Space Station.” Seventeen of those dealt with processes that robots could have performed, such as launching small satellites, detecting cosmic particles, employing microgravity conditions for drug development and the study of flames, and 3-D printing in space. The remaining three dealt with muscle atrophy and bone loss, growing food, or identifying microbes in space, things that are important for humans in that environment, but hardly a rationale for sending them there.

by crissly | Feb 16, 2022 | Uncategorized

In 2018, while the Argentine Congress was hotly debating whether to decriminalize abortion, the Ministry of Early Childhood in the northern province of Salta and the American tech giant Microsoft presented an algorithmic system to predict teenage pregnancy. They called it the Technology Platform for Social Intervention.

“With technology you can foresee five or six years in advance, with first name, last name, and address, which girl—future teenager—is 86 percent predestined to have an adolescent pregnancy,” Juan Manuel Urtubey, then the governor of the province, proudly declared on national television. The stated goal was to use the algorithm to predict which girls from low-income areas would become pregnant in the next five years. It was never made clear what would happen once a girl or young woman was labeled as “predestined” for motherhood or how this information would help prevent adolescent pregnancy. The social theories informing the AI system, like its algorithms, were opaque.

The system was based on data—including age, ethnicity, country of origin, disability, and whether the subject’s home had hot water in the bathroom—from 200,000 residents in the city of Salta, including 12,000 women and girls between the ages of 10 and 19. Though there is no official documentation, from reviewing media articles and two technical reviews, we know that “territorial agents” visited the houses of the girls and women in question, asked survey questions, took photos, and recorded GPS locations. What did those subjected to this intimate surveillance have in common? They were poor, some were migrants from Bolivia and other countries in South America, and others were from Indigenous Wichí, Qulla, and Guaraní communities.

Although Microsoft spokespersons proudly announced that the technology in Salta was “one of the pioneering cases in the use of AI data” in state programs, it presents little that is new. Instead, it is an extension of a long Argentine tradition: controlling the population through surveillance and force. And the reaction to it shows how grassroots Argentine feminists were able to take on this misuse of artificial intelligence.

In the 19th and early 20th centuries, successive Argentine governments carried out a genocide of Indigenous communities and promoted immigration policies based on ideologies designed to attract European settlement, all in hopes of blanquismo, or “whitening” the country. Over time, a national identity was constructed along social, cultural, and most of all racial lines.

This type of eugenic thinking has a propensity to shapeshift and adapt to new scientific paradigms and political circumstances, according to historian Marisa Miranda, who tracks Argentina’s attempts to control the population through science and technology. Take the case of immigration. Throughout Argentina’s history, opinion has oscillated between celebrating immigration as a means of “improving” the population and considering immigrants to be undesirable and a political threat to be carefully watched and managed.

More recently, the Argentine military dictatorship between 1976 and 1983 controlled the population through systematic political violence. During the dictatorship, women had the “patriotic task” of populating the country, and contraception was prohibited by a 1977 law. The cruelest expression of the dictatorship’s interest in motherhood was the practice of kidnapping pregnant women considered politically subversive. Most women were murdered after giving birth and many of their children were illegally adopted by the military to be raised by “patriotic, Catholic families.”

While Salta’s AI system to “predict pregnancy” was hailed as futuristic, it can only be understood in light of this long history, particularly, in Miranda’s words, the persistent eugenic impulse that always “contains a reference to the future” and assumes that reproduction “should be managed by the powerful.”

Due to the complete lack of national AI regulation, the Technology Platform for Social Intervention was never subject to formal review and no assessment of its impacts on girls and women has been made. There has been no official data published on its accuracy or outcomes. Like most AI systems all over the world, including those used in sensitive contexts, it lacks transparency and accountability.

Though it is unclear whether the technology program was ultimately suspended, everything we know about the system comes from the efforts of feminist activists and journalists who led what amounted to a grassroots audit of a flawed and harmful AI system. By quickly activating a well-oiled machine of community organizing, these activists brought national media attention to how an untested, unregulated technology was being used to violate the rights of girls and women.

“The idea that algorithms can predict teenage pregnancy before it happens is the perfect excuse for anti-women and anti-sexual and reproductive rights activists to declare abortion laws unnecessary,” wrote feminist scholars Paz Peña and Joana Varon at the time. Indeed, it was soon revealed that an Argentine nonprofit called the Conin Foundation, run by doctor Abel Albino, a vocal opponent of abortion rights, was behind the technology, along with Microsoft.

by crissly | Jan 20, 2022 | Uncategorized

The character of conflict between nations has fundamentally changed. Governments and militaries now fight on our behalf in the “gray zone,” where the boundaries between peace and war are blurred. They must navigate a complex web of ambiguous and deeply interconnected challenges, ranging from political destabilization and disinformation campaigns to cyberattacks, assassinations, proxy operations, election meddling, or perhaps even human-made pandemics. Add to this list the existential threat of climate change (and its geopolitical ramifications) and it is clear that the description of what now constitutes a national security issue has broadened, each crisis straining or degrading the fabric of national resilience.

Traditional analysis tools are poorly equipped to predict and respond to these blurred and intertwined threats. Instead, in 2022 governments and militaries will use sophisticated and credible real-life simulations, putting software at the heart of their decision-making and operating processes. The UK Ministry of Defence, for example, is developing what it calls a military Digital Backbone. This will incorporate cloud computing, modern networks, and a new transformative capability called a Single Synthetic Environment, or SSE.

This SSE will combine artificial intelligence, machine learning, computational modeling, and modern distributed systems with trusted data sets from multiple sources to support detailed, credible simulations of the real world. This data will be owned by critical institutions, but will also be sourced via an ecosystem of trusted partners, such as the Alan Turing Institute.

An SSE offers a multilayered simulation of a city, region, or country, including high-quality mapping and information about critical national infrastructure, such as power, water, transport networks, and telecommunications. This can then be overlaid with other information, such as smart-city data, information about military deployment, or data gleaned from social listening. From this, models can be constructed that give a rich, detailed picture of how a region or city might react to a given event: a disaster, epidemic, or cyberattack or a combination of such events organized by state enemies.

Defense synthetics are not a new concept. However, previous solutions have been built in a standalone way that limits reuse, longevity, choice, and—crucially—the speed of insight needed to effectively counteract gray-zone threats.

National security officials will be able to use SSEs to identify threats early, understand them better, explore their response options, and analyze the likely consequences of different actions. They will even be able to use them to train, rehearse, and implement their plans. By running thousands of simulated futures, senior leaders will be able to grapple with complex questions, refining policies and complex plans in a virtual world before implementing them in the real one.
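The idea of "running thousands of simulated futures" can be illustrated with a toy Monte Carlo comparison. This is a sketch under heavy assumptions, not a description of how an SSE actually works: the damage scale, the response options, their mitigation factors, and the function names `simulate_future` and `compare_options` are all hypothetical.

```python
import random
from statistics import mean


def simulate_future(rng: random.Random, mitigation: float) -> float:
    """One simulated future: a random event severity, reduced by the chosen response."""
    severity = rng.uniform(0.0, 100.0)  # hypothetical damage scale, not real data
    return severity * (1.0 - mitigation)


def compare_options(options: dict, runs: int = 5000, seed: int = 0) -> dict:
    """Average simulated damage for each response option."""
    results = {}
    for name, mitigation in options.items():
        # Re-seeding per option means every option faces the same set of futures,
        # so differences in the averages come from the response, not from luck.
        rng = random.Random(seed)
        results[name] = mean(simulate_future(rng, mitigation) for _ in range(runs))
    return results


# Hypothetical response options and mitigation factors, for illustration only.
options = {"no_action": 0.0, "early_warning": 0.3, "full_evacuation": 0.6}
for name, damage in compare_options(options).items():
    print(f"{name}: mean damage {damage:.1f}")
```

A real synthetic environment would replace the single random number with layered models of infrastructure, population, and events, but the structure of the question stays the same: hold the simulated futures fixed, vary the response, and compare the outcomes.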

One key question that will only grow in importance in 2022 is how countries can best secure their populations and supply chains against dramatic weather events caused by climate change. SSEs will be able to help answer this by combining data on regional infrastructure, networks, roads, and population with meteorological models to see how and when events might unfold.

by crissly | Dec 14, 2021 | Uncategorized

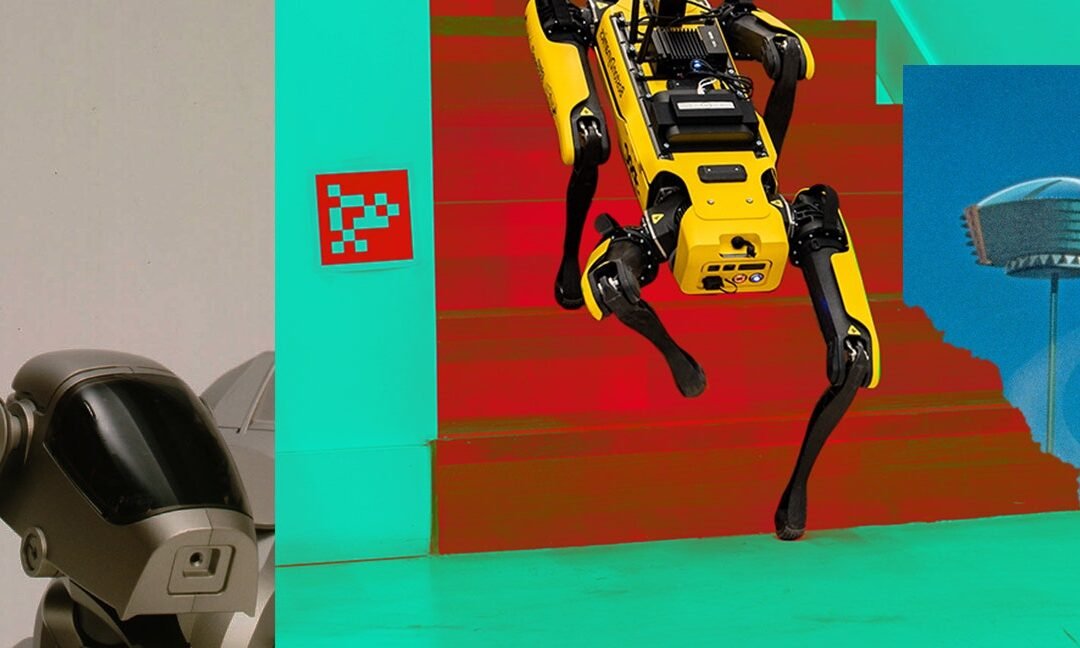

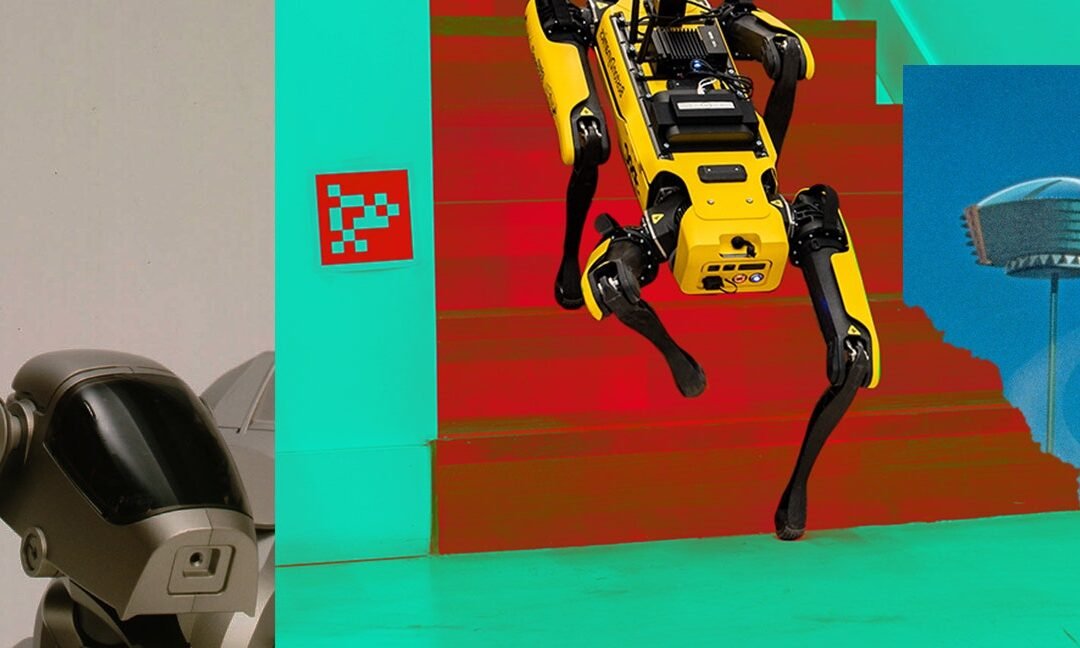

George Jetson did not want his family to adopt a dog. For the patriarch of the futuristic family in the 1960s cartoon The Jetsons, apartment living in the age of flying cars and cities in the sky was incompatible with an animal in need of regular walking and grooming, so he instead purchased an electronic dog called ‘Lectronimo, which required no feeding and even attacked burglars. In a contest between Astro—basically future Scooby-Doo—and the robot dog, ‘Lectronimo performed all classic dog tasks better, but with zero personality. The machine ended up a farcical hunk of equipment, a laugh line for both the Jetsons and the audience. Robots aren’t menaces, they’re silly.

That’s how we have imagined the robot dog, and animaloids in general, for much of the 20th century, according to Jay Telotte, professor emeritus of the School of Literature, Media, and Communication at Georgia Tech. Disney’s 1927 cartoon “The Mechanical Cow” imagines a robot bovine on wheels with a broom for a tail skating around delivering milk to animal friends. The worst that could happen is your mechanical farm could go haywire, as in the 1930s cartoon “Technoracket,” but even then robot animals presented no real threat to their biological counterparts. “In fact, many of the ‘animaloid’ visions in movies and TV over the years have been in cartoons and comic narratives,” says Telotte, where “the laughter they generate is typically assuring us that they are not really dangerous.” The same goes for most of the countless robot dogs in popular culture over the years, from Dynomutt, Dog Wonder, to the series of cyborg dogs named K9 in Doctor Who.

Our nearly 100-year romance with the robot dog, however, has come to a dystopian end. It seems that every month Boston Dynamics releases another dancing video of their robot SPOT, and the media responds with initial awe, then with trepidation, and finally with night-terror editorials about our future under the brutal rule of robot overlords. While Boston Dynamics explicitly prohibits their dogs being turned into weapons, Ghost Robotics’ SPUR is currently being tested at various Air Force bases (with a lovely variety of potential weapon attachments), and Chinese company Xiaomi hopes to undercut SPOT with their much cheaper and somehow more terrifying Cyberdog. All of which is to say, the robot dog as it once was (a symbol of a fun, high-tech future full of incredible, social, artificial life) is dead. How did we get here? Who killed the robot dog?

The quadrupeds we commonly call robot dogs are descendants of a long line of mechanical life, historically called automata. One of the earliest examples of such autonomous machines was the “defecating duck,” created by French inventor Jacques de Vaucanson nearly 300 years ago, in 1739. This mechanical duck—which appeared to eat little bits of grain, pause, and then promptly excrete digested grain on the other end—along with numerous other automata of the era, were “philosophical experiments, attempts to discern which aspects of living creatures could be reproduced in machinery, and to what degree, and what such reproductions might reveal about their natural subjects,” writes Stanford historian Jessica Riskin.

The defecating duck, of course, was an extremely weird and gross fraud, preloaded with a poop-like substance. But still, the question of which aspects of life were purely mechanical was a dominant intellectual preoccupation of the time, and even inspired the use of soft, lightweight materials such as leather in the construction of another kind of biological model: prosthetic hands, which had previously been built out of metal. Even today, biologists build robot models of their animal subjects to better understand how they move. As with many of its mechanical brethren, much of the robot dog’s life has been an exercise in re-creating the beloved pet, perhaps even subconsciously, to learn which aspects of living things are merely mechanical and which are organic. A robot dog must look and act sufficiently doglike, but what actually makes a dog a dog?

American manufacturing company Westinghouse debuted perhaps the first electrical dog, Sparko, at the 1940 New York World’s Fair. The 65-pound metallic pooch served as a companion to the company’s electric man, Elektro. (The term robot did not come into popular usage until around the mid 20th century.) What was most interesting about both of these promotional robots was their seeming autonomy: Light stimuli set off their action sequences, so effectively, in fact, that Sparko’s sensors apparently responded to the lights of a passing car, causing it to speed into oncoming traffic. Part of a campaign to help sell washing machines, Sparko and Elektro represented Westinghouse’s engineering prowess, but they were also among the first attempts to bring sci-fi into reality and laid the groundwork for an imagined future full of robotic companionship. The idea that robots can also be fun companions endured throughout the 20th century.

When AIBO, the archetypal robot dog created by Sony, first appeared in the early 2000s, it was its artificial intelligence that made it extraordinary. Ads for the second-generation AIBO promised “intelligent entertainment” that mimicked free will with individual personalities. AIBO’s learning capabilities made each dog at least somewhat unique, and so easier to consider special and easier to love. It was their AI that made them doglike: playful, inquisitive, occasionally disobedient. When I, 10 years old, walked into FAO Schwarz in New York in 2001 and watched the AIBOs on display head-butt little pink balls, something about these little creations tore at my heartstrings. Despite the unbridgeable rift between me and the machine, I still wanted to try to get to know it, to understand it. I wanted to love a robot dog.